It’s been 6 years since the last published post on this blog. Many things have happened since, among them the spin-off of another website for my photography, which sees marginally more activity than this one. I’ve also refocused my interests, spending a lot of my free time researching the architectural history of libraries, which have become almost the only subjects of my images.

In late 2021, this obsession of mine with library architecture led me to participate in the Toronto Society of Architects’ yearly Gingerbread City baking competition, submitting a model of the Monique-Corriveau library in Québec City. This was a lot of work, but also a lot of fun. My main inspiration was the previous year’s entry by now TSA Executive Director Joël León Danis, whose yearly gingerbread creations are on a different level entirely.

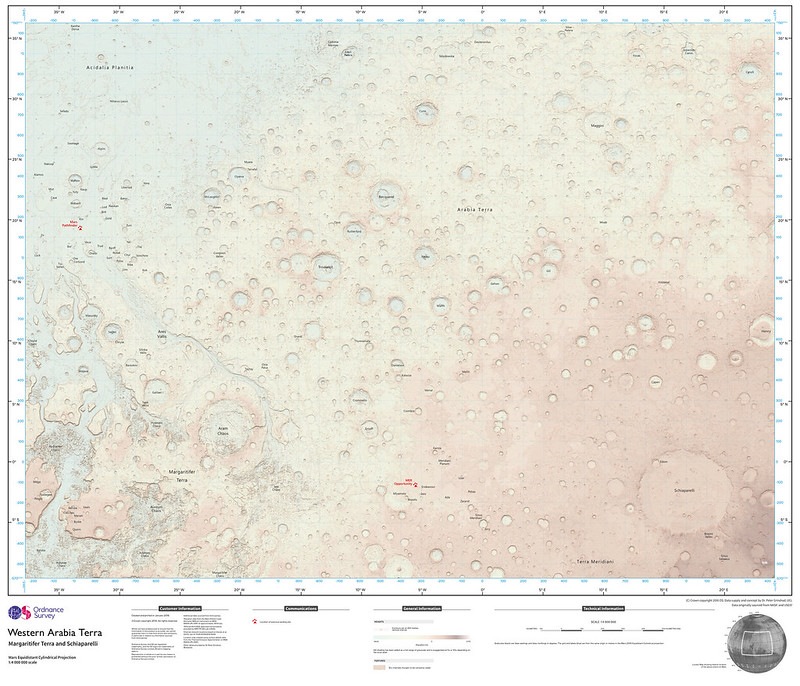

In 2022, my entry to the same competition was a model of the Beinecke Rare Book and Manuscript Library at Yale University.

In 2023, I baked a gingerbread and isomalt version of the Halifax Central Library, which I had visited earlier that year for my photography project.

The best part of the TSA yearly competition is chatting with my fellow contestants (it’s not really a contest, there are no prizes), exchanging tips and techniques. This is what motivated me to resuscitate this blog to document my gingerbread engineering attempts so far, in the hope that they can be useful to others but mostly so that I can remember them myself.

Planning

As with all my projects, I like to start my gingerbread builds by doing some research, finding blueprints and good reference photos of my source buildings from all angles. Blueprints are helpful in getting the proportions right, but will likely need to be massively simplified for the model to translate well to gingerbread. From them, I can determine the target scale of my gingerbread model and calculate dimensions accordingly. An important factor to remember is that the gingerbread pieces will be pretty thick (5-8mm or so), and this needs to be factored into the calculations.
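To make that arithmetic concrete, here is a minimal Python sketch. It scales a real-world length down to model size, then shortens a wall that sits between two other walls by their combined thickness. The 1:200 scale, the 30 m by 20 m footprint and the function names are all invented for the example, not taken from any of my builds.

```python
def to_model_mm(real_m: float, scale: int) -> float:
    """Convert a real-world length in metres to a model length in millimetres."""
    return real_m * 1000 / scale


def inner_wall_mm(outer_mm: float, thickness_mm: float) -> float:
    """Length of a wall that butts between two perpendicular walls.

    Each of the two end walls takes up one thickness of the outer footprint.
    """
    return outer_mm - 2 * thickness_mm


# Hypothetical building: 30 m x 20 m footprint, modelled at 1:200.
SCALE = 200
THICKNESS_MM = 7  # assumed dough thickness, within the 5-8 mm range

front = to_model_mm(30, SCALE)        # front and back walls run the full width
side_outer = to_model_mm(20, SCALE)   # outer depth of the footprint
side = inner_wall_mm(side_outer, THICKNESS_MM)  # side walls fit in between

print(f"front/back walls: {front:.0f} mm")
print(f"side walls: {side:.0f} mm (outer footprint {side_outer:.0f} mm)")
```

With these made-up numbers, the side walls lose 14 mm to the thickness of the front and back walls. That is easy to overlook when measuring straight off a blueprint.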

For more complex shapes, I’ve found that starting with a simple cardboard scale model helps with designing the individual pieces of gingerbread that I will need to bake. Contrary to the images below where I used some discarded cereal boxes, I recommend using thicker corrugated cardboard instead, as it’s closer to the actual thickness of gingerbread or candy glass plus the frosting “glue”. For this project, using thin cardboard led me to underestimate wall thickness and I had to remake the roof pieces.

Complex shapes can then be either traced from the model pieces, or derived from the blueprints and then drawn or printed on paper to make guides for cutting gingerbread. Simpler, more geometric shapes can be directly measured on gingerbread without the need for guides.

Depending on the complexity of the build, internal support pieces may be required, and those should be planned for as well.

Something that I always forget at this stage is to plan where my lights will go through. This is not a huge deal, as holes can always be drilled in the baked pieces later on for wiring, but I’d like to be more mindful of this in future builds.

Finally, I tend to err on the safe side and always bake a few extra “beams” that help me add support where needed during the construction phase.

Materials and techniques

When it comes to gingerbread architecture, I’m a bit of a purist and try to use mostly materials that I make from scratch. These include:

- Gingerbread (walls and internal structure)

- Tuile (translucent walls – like those of the Beinecke library model)

- Glass candy (windows)

- Royal icing (glue and for piping objects such as trees)

Other materials that I’ve found useful:

- Pretzel sticks and grissini (support beams)

- Candy for decor (used parsimoniously)

Gingerbread

The recipe below results in what I like to call “structural gingerbread”. It’s not really tasty, especially when slightly overbaked, but it’s fairly sturdy. It also uses shortening instead of butter: it will largely go uneaten and, at Canadian dairy prices, I’d rather not waste any.

- 1 1/2 cups shortening

- 1 1/4 cups sugar

- 2/3 cup fancy molasses

- 3 eggs

- 7 cups flour

- 1 TBsp cinnamon

- 1 1/2 TBsp ginger powder

- 1 1/2 tsp baking soda

- 3/4 tsp salt

- Cream shortening and sugar in the stand mixer (paddle)

- Keep the mixer running at low speed, gradually add molasses and eggs

- Combine remaining dry ingredients in a bowl then add to batter gradually

- Place dough in saran wrap and refrigerate for a few hours

- Roll dough between sheets to 7mm and cut to shape. 4mm is feasible but will be less sturdy, so use it only for decorative, non-structural pieces.

- Bake at 190°C for about 10 minutes, until brown – err on the side of overbaking

I use my trusty Betty Bossi “Teighölzli” to achieve consistent thickness for my dough, but you can use rulers or pieces of wood instead.

Gingerbread needs to be cut to shape before baking. This can easily be done using a knife, spatula or pizza wheel. I like using a small spatula, as its blunt end doesn’t damage my baking paper like a knife would. I revert to a knife for more delicate pieces. The pizza cutter is great for even straight lines.

For geometrical pieces, I don’t use tracing paper at all but instead measure out the dimensions and cut them using my dough spacers or a ruler as a guide.

Before baking, texture can be added to the gingerbread by using silicone moulds or by scoring it lightly, for example to mimic concrete formwork, textured concrete, paved surfaces, tiles, etc. However, any texture on horizontal surfaces will likely disappear if I’m aiming for a “snow effect” layer of frosting, so I usually don’t bother texturing those.

Curved pieces are trickier to achieve. It may be possible to quickly bend the warm gingerbread as it comes out of the oven. My best results were achieved by baking my dough on cardboard forms (held together with icing rather than glue, to avoid any glue fumes in the oven).

Despite my best efforts at baking all shapes to size, pieces tend to shift a little while baking. Missing material can easily be compensated for by using a bit more icing at the joints, while extra material can be carefully “sanded” down using a microplane. Sandpaper can be used for fine corrections, but it tends to get clogged with gingerbread dust, so I don’t use it for bigger jobs.

It’s always a good idea to plan for spares, especially as accidents do happen. I usually bake an extra set of the more complex shaped pieces, in case one breaks. I then cut the leftover dough into a variety of rectangles and beams, to be used in case I need additional support during construction.

Tuile

I used tuile to recreate the translucent marble panels of the Beinecke library and was blown away by the results!

Contrary to the gingerbread above, these tuiles are pretty tasty, which comes in handy as it’s a very brittle material and quite likely to break during construction. For this reason, I advise baking some spares. Broken or leftover tuiles are delicious!

- 2 egg whites

- 125g icing sugar

- 60g flour

- 1 tsp vanilla extract or other flavour (e.g. almond)

- 60g butter, melted and cooled

- Flaked almonds (optional)

- Whisk egg whites a little in a bowl and add sugar, whisk more until frothy (but not stiff peaks, we’re not making meringue)

- Stir in flour and vanilla extract, then add melted butter.

- Mix to a smooth batter. It should flow slowly down a spoon, kind of like pancake batter

- Spread batter through templates cut from acetate sheets (very thin) onto lined baking trays, then remove template

- Bake at 180°C for 7-8 minutes (until the edges turn golden) – do not overbake!

- Working quickly, remove the tuiles with an offset spatula and roll or form them if needed

(Recipe adapted from Tales from the Kitchen Shed)

Tuiles don’t keep for a very long time as they absorb moisture, but they can last one night on a cooling wire. The batter keeps for a few days in the fridge if needed.

Forming tuiles is done by spreading the batter in a very thin layer using forms of acetate sheets cut to the desired shapes. Since I didn’t have acetate sheets on hand, I used a set of ugly old placemats, in which I cut the shapes of the panels I needed.

Tuile panels are very thin and fragile, so they definitely cannot be used as freestanding walls. Instead, think of them as the curtain walls of gingerbread architecture: affixed with a small amount of icing to a support structure of gingerbread slabs.

Edit: It later occurred to me that the sheets of host cuttings that, for some reason, are ubiquitous in Québec corner stores would also make a great translucent material.

Glass candy (or sugar glass) recipe

The following recipe (adapted from the nerdy mamma blog) makes perfectly serviceable sugar “glass” that can be used for gingerbread windows, without the use of exotic ingredients. The downside is that the sugar needs to be cooked to a certain temperature for it to work, during which it’s very hard to keep it from caramelizing a little. If this happens, the candy will not be perfectly clear but will take on a slight yellow colour. This year, I plan to try using isomalt instead, which does not require such high temperatures and should allow better colour control.

- 2 cups granulated white sugar

- 3/4 cup water

- 2/3 cup light corn syrup

- Food colouring (optional)

Warning! Boiling sugar is much hotter than boiling water, and it sticks. Be very careful not to spill or splash any of it on your skin.

Make sure your forms for pouring glass candy are ready before starting the recipe as you won’t have time once it’s ready to pour.

- Mix sugar, water and syrup in a small saucepan and heat gently, stirring to combine.

- Once the sugar is fully dissolved, increase the heat and bring to a boil, stirring constantly.

- Using a candy thermometer (ideally) or an instant read thermometer (not ideal but OK), cook until the mixture reaches 150°C (300°F) – known as the “hard crack stage”.

- Once the target temperature is reached, immediately remove from heat and pour into forms.

- Allow to cool for 2 hours (cover to avoid disturbing the glass).
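If your thermometer only reads in Fahrenheit, the target is easy to convert. The small Python sketch below does the conversion and checks a reading against the 150°C target from the steps above. The function names are my own invention. Note that 150°C works out to 302°F, so the 300°F figure is a convenient round number.

```python
HARD_CRACK_C = 150  # target temperature from the recipe steps


def c_to_f(celsius: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


def f_to_c(fahrenheit: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (fahrenheit - 32) * 5 / 9


def ready_to_pour(reading: float, unit: str = "C") -> bool:
    """True once the thermometer reading has reached the hard crack target."""
    celsius = reading if unit.upper() == "C" else f_to_c(reading)
    return celsius >= HARD_CRACK_C


print(c_to_f(HARD_CRACK_C))      # target expressed in Fahrenheit
print(ready_to_pour(140))        # still too cool
print(ready_to_pour(305, "F"))   # past the target on a Fahrenheit thermometer
```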

Glass candy can be formed either by filling holes in previously baked pieces of gingerbread, or by pouring into moulds made of baking paper.

The paper forms worked quite well. Bubbles can apparently be removed by quickly passing a small torch over the surface of the glass before it sets, but I’ve never tried it.

The result is quite strong and can be used for structural elements. I used icing to hold the pieces in place, but I think two pieces could also be stuck together by heating the contact areas with a torch and pressing them together, a technique I plan to try in the future. Pieces can be carefully sanded down to size if needed, but be careful not to break them.

As said earlier, making glass candy from sugar is tricky as it can easily caramelize, resulting in a yellow colour. This year, I’m planning to use isomalt instead and will report back with my results.

Royal icing recipe

This is the “glue” that will stick the pieces together. It can also be used to pipe decorative elements, such as icicles, trees, candy canes, etc. if coloured.

I’ve found that royal icing, which includes egg white, tends to make sturdier and more solid “glue” than a simple sugar and water mixture. The latter can however be used in a pinch for small touch ups.

- 3 egg whites

- pinch of salt

- 500g icing sugar

- Beat the egg whites to stiff peaks (the pinch of salt helps kickstart this process)

- Gradually add the icing sugar until the desired consistency is reached.

- Add a few drops of food colouring if required

- Transfer into piping bags

Fresh icing will gradually dry up, so minimize air exposure if you need to store it for more than 30 minutes. Either keep it in closed piping bags, or lay a sheet of saran wrap on top of your bowl of icing. Ideally you don’t want to keep icing standing for too long (it has raw egg in it). If baking in stages, I recommend making smaller batches for each stage.
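For those smaller batches, the quantities can be scaled by the number of egg whites. Here is a minimal Python sketch, assuming the 3 egg whites to 500g icing sugar ratio from the recipe above; the function name is made up. Treat the result as a starting point only, since the sugar is added gradually until the consistency feels right.

```python
# Base recipe: 3 egg whites to 500 g icing sugar.
BASE_EGG_WHITES = 3
BASE_SUGAR_G = 500


def icing_batch(egg_whites: int) -> dict:
    """Scale the base recipe to the given number of egg whites."""
    if egg_whites < 1:
        raise ValueError("need at least one egg white")
    sugar = BASE_SUGAR_G * egg_whites / BASE_EGG_WHITES
    return {"egg_whites": egg_whites, "icing_sugar_g": round(sugar)}


for n in (1, 2, 3):
    batch = icing_batch(n)
    print(f"{batch['egg_whites']} egg white(s): about {batch['icing_sugar_g']} g icing sugar")
```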

Buttercream frosting

This simple frosting sticks well to all surfaces and can be applied in the desired thickness to mimic snow on roofs and the ground.

- 1/2 cup shortening

- 2 cups icing sugar

- 2 TBsp milk

- Cream shortening in a stand mixer.

- Gradually add sugar.

- Finish with the milk and continue beating until the desired consistency is reached. Add more milk if too solid or more sugar if too liquid.

This can either be spread using a spatula or piped.

Construction techniques

When all the pieces are finished baking and the icing is ready, it’s time to start building the model! I like to use a piece of plywood as a base, but you can also use a tray, a serving plate or a piece of wrapped cardboard.

I like to light up my models by adding string LED lights, so I’m careful to drill a hole in my base to feed them through, and to be mindful of where I want there to be light when building the model.

For the sunken court of the Beinecke library, I first constructed a raised baseplate with a hole for the sunken court. This also created an empty space underneath the library where I planned to stash the battery box for the lights, but it ended up being too small.

This gross simplification of the actual plaza was scaled to match the serving tray I used as a base, from which the other dimensions were all calculated. Keeping the plans on hand was useful to correctly position the library in relation to the court.
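Working backwards from the base like this amounts to picking the scale at which the real footprint just fits the tray. A rough Python sketch of that calculation follows. The 60 m by 40 m plaza and the 400 mm by 300 mm tray are placeholder numbers, not the real Beinecke plaza or my actual tray, and the function name is hypothetical.

```python
import math


def scale_to_fit(real_w_m: float, real_d_m: float,
                 tray_w_mm: float, tray_d_mm: float) -> int:
    """Smallest whole N such that a 1:N model fits the tray in both directions."""
    n_width = real_w_m * 1000 / tray_w_mm
    n_depth = real_d_m * 1000 / tray_d_mm
    # The tighter of the two directions decides the scale.
    return math.ceil(max(n_width, n_depth))


# Placeholder dimensions for illustration only.
n = scale_to_fit(real_w_m=60, real_d_m=40, tray_w_mm=400, tray_d_mm=300)
model_w = 60 * 1000 / n
model_d = 40 * 1000 / n

print(f"scale 1:{n}, model footprint {model_w:.0f} mm x {model_d:.0f} mm")
```

Every other dimension of the model can then be divided by the same N.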

An assortment of glasses, bowls, chopstick rests and other cutlery was used to keep the pieces in place while the icing was setting.

Once the construction is complete, the last step is to add a layer of icing to mimic snow, and to finish decorating. I like a minimalist approach to my designs and thus don’t go overboard with the coloured candy decoration, but that’s just a matter of taste.

I’ve found that just adding powdered sugar run through a sieve can work very well to add a layer of snow to horizontal surfaces. To get it to stick to the sloped roof of the Monique-Corriveau library, however, I first spread a layer of buttercream frosting before finishing off with a dusting of icing sugar.

Conclusion

That’s everything I’ve learned so far in the delicate art of gingerbread architecture. I hope to continue adding to this post as I keep experimenting.

For more inspiration, I recommend checking out Joël’s Instagram stories, as well as previous TSA Gingerbread City entries. There’s much to learn from such talented gingerbread architects!

Also, if you don’t already know about the Monique-Corriveau library, go have a look at it on my photo blog. It’s a converted 1964 church designed by Jean-Marie Roy, transformed into a library in 2013 by Dan Hanganu and Côté/Leahy/Cardas, and it’s my favourite library in Québec City!

If you found this useful and have used any of the tips here, I’d love to see the results! Please get in touch if you care to share them.